Abstract

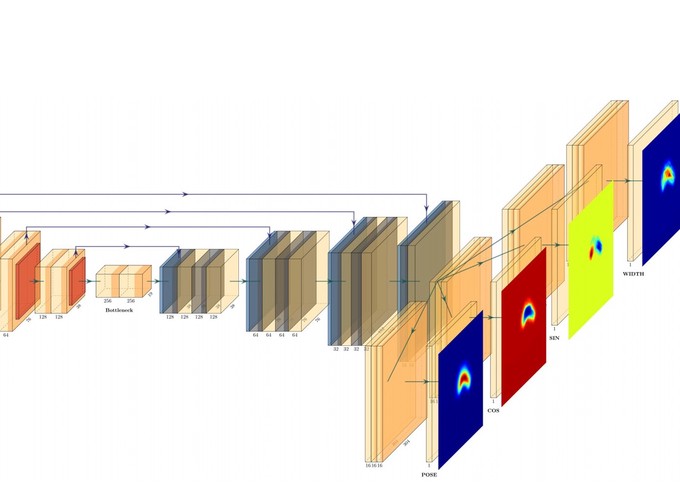

We consider the problem of robotic grasping using depth and RGB information sampled from a real sensor. We design an encoder-decoder neural network to predict the grasp policy in real time. The method fuses the advantages of depth and RGB images and is robust to variations in grasp and observation height. We evaluate our method on a physical robotic system and propose an open-loop algorithm to carry out the grasp operation. We analyze the experimental results from multiple perspectives, and they show that our method is competitive with the state of the art in grasp performance, real-time capability, and model size.

Publication

In The 2019 Australian & New Zealand Control Conference