Abstract

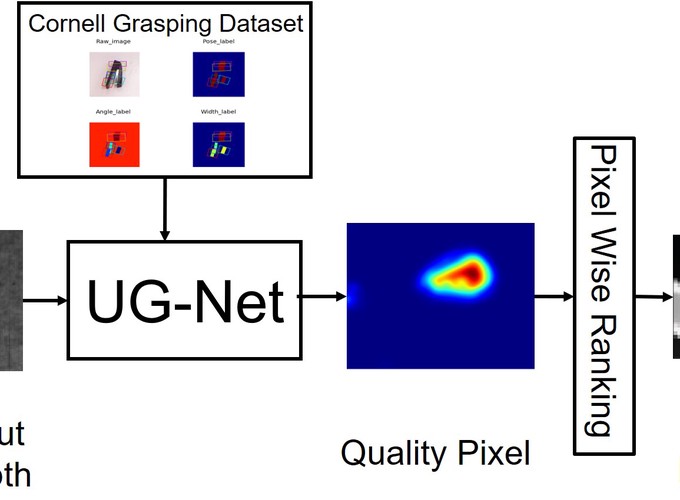

In this work, we present a real-time, deep convolutional encoder-decoder neural network that realizes open-loop robotic grasping using only depth image information. Our proposed U-Grasping fully convolutional neural network (UG-Net) predicts the quality and pose of a grasp pixel-wise. Using only depth information to predict a grasp policy at each pixel overcomes the limitation of sampling discrete grasp candidates, which can take a lot of computation time. Compared with other pixel-wise grasp learning methods, UG-Net improves grasp quality and makes more robust grasping decisions within 27 ms, with approximately 370 MB of parameters (a lightweight, competitive version is also given).

Publication

In the IEEE International Conference on Real-time Computing and Robotics, 2019